Last December, Sébastien Stormacq wrote about the availability of a distributed map state for AWS Step Functions, a new feature that lets you orchestrate large-scale parallel workloads in the cloud. That's when Charles Burton, a data systems engineer at a company called CyberGRX, found out about it and refactored his workflow. The change reduced the processing time for his machine learning (ML) processing job from 8 days to 56 minutes. Before, running the job required an engineer to monitor it constantly. Now it runs in less than an hour with no support needed. In addition, the new implementation with AWS Step Functions Distributed Map costs less than the original one.

What CyberGRX accomplished with this solution is a perfect example of what serverless technologies embrace: letting the cloud do as much of the undifferentiated heavy lifting as possible, so engineers and data scientists have more time to focus on what's important for the business. In this case, that means continuing to improve the model and the processes for one of CyberGRX's key offerings: a cyber risk assessment of third parties that uses ML insights from its large and growing database.

What’s the business problem?

CyberGRX shares third-party cyber risk management (TPCRM) data with its customers. It predicts, with high confidence, how a third-party company will respond to a risk assessment questionnaire. To do this, CyberGRX has to run its predictive model on every company in its platform, and it currently has predictive data on more than 225,000 companies. Whenever a new company is added or a company's data changes, the team regenerates the predictive model by processing the entire dataset. CyberGRX data scientists also improve the model or add new features to it over time, which likewise requires the model to be regenerated.

The challenge is running this job for 225,000 companies in a timely manner, with as few hands-on resources as possible. The job runs a set of operations for each company, and each company's calculation is independent of every other company's. In the ideal case, this means every company can be processed at the same time. However, implementing such massive parallelization is a hard problem to solve.
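Because each company's calculation is independent, the job is what is often called embarrassingly parallel. The following minimal local sketch illustrates that property; it is not CyberGRX's code. `score_company` is a hypothetical placeholder for the real model, and the thread pool only stands in for whatever compute actually runs the work.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Dict, List


def score_company(company_id: str) -> float:
    """Hypothetical stand-in for the per-company model run.

    The result depends only on this company's input, which is what
    makes the overall job embarrassingly parallel.
    """
    return float(sum(ord(ch) for ch in company_id) % 100) / 100.0


def score_all(company_ids: List[str], max_workers: int = 8) -> Dict[str, float]:
    # No company's result depends on another's, so the work can be fanned
    # out to any number of workers. pool.map returns results in input order.
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        scores = pool.map(score_company, company_ids)
        return dict(zip(company_ids, scores))


if __name__ == "__main__":
    ids = [f"company-{i}" for i in range(5)]
    print(score_all(ids))
```

Raising `max_workers` changes only how fast the dictionary fills, never its contents. That is the property that later lets each company run in its own Lambda invocation.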

First iteration

With that in mind, the company built the first iteration of the pipeline using Kubernetes and Argo Workflows, an open source, container-native workflow engine for orchestrating parallel jobs on Kubernetes. The team already used both tools in its infrastructure, so they were familiar.

But as soon as they tried to run the job for all the companies on the platform, they ran up against the limits of what their system could handle efficiently. Because the solution depended on a centralized controller, Argo Workflows, it was not robust, and the controller was scaled to its maximum capacity for the duration of the run. At that time, they had only 150,000 companies, yet running the job across all of them took about 8 days. During that time the system would crash and need to be restarted. The process was very labor intensive, and it usually required an engineer on call to monitor and troubleshoot the job.

The tipping point came when Charles joined the Analytics team at the beginning of 2022. One of his first tasks was a full model run on the roughly 170,000 companies on the platform at that time. The model run lasted the whole week and ended at 2:00 AM on a Sunday. That's when he decided their system needed to evolve.

Second iteration

With the pain of that last model run fresh in his mind, Charles thought through how he could rewrite the workflow. His first idea was to use AWS Lambda and Amazon SQS, but he realized the solution also needed an orchestrator. That's why he chose Step Functions, a serverless service that helps you automate processes, orchestrate microservices, and build data and ML pipelines. It also scales as needed.

Charles had the new version of the workflow running on Step Functions in about 2 weeks. His first step was adapting his existing Docker image to run in Lambda using Lambda's container image packaging format. Because the container already worked for his data processing tasks, this update was simple. He then made three concurrency changes:

- He scheduled Lambda provisioned concurrency so that all the functions he needed were ready when he started the job.
- He configured reserved concurrency so that Lambda could handle the maximum number of concurrent executions the job required.
- He raised the account-level Lambda concurrent execution quota so that many functions could run at the same time.
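Reserved and provisioned concurrency map to two Lambda API calls. The following is a minimal sketch that assumes the AWS SDK for Python (boto3) and a function published behind an alias. The function and alias names are hypothetical. The client is passed in, so it can be a real `boto3.client("lambda")` or a test stub. Raising the account-level concurrency quota is a separate Service Quotas request and is not shown.

```python
from typing import Any


def configure_concurrency(
    lambda_client: Any,
    function_name: str,
    alias: str,
    reserved: int,
    provisioned: int,
) -> None:
    """Reserve and pre-warm concurrency for one Lambda function (sketch)."""
    # Sanity check: Lambda won't allocate more provisioned concurrency
    # than the function's reserved concurrency.
    if provisioned > reserved:
        raise ValueError("provisioned concurrency cannot exceed reserved concurrency")

    # Reserved concurrency sets aside this many concurrent executions from
    # the account pool for this function (and also caps it at that number).
    lambda_client.put_function_concurrency(
        FunctionName=function_name,
        ReservedConcurrentExecutions=reserved,
    )
    # Provisioned concurrency keeps initialized execution environments ready
    # on a version or alias, so the job doesn't start with a wave of cold starts.
    lambda_client.put_provisioned_concurrency_config(
        FunctionName=function_name,
        Qualifier=alias,
        ProvisionedConcurrentExecutions=provisioned,
    )
```

For example, `configure_concurrency(boto3.client("lambda"), "score-company", "live", reserved=1000, provisioned=500)` would apply both settings to a hypothetical `score-company` function. The numbers are placeholders, not CyberGRX's actual values.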

To run the steps in parallel, he used Step Functions and the Map state. The Map state let Charles run a set of workflow steps for each item in a dataset, with the iterations running in parallel. However, the Step Functions Map state supports only up to 40 concurrent iterations, and CyberGRX needed more parallelization than that. So the team built a solution that launched multiple state machines in parallel, which let them iterate quickly across all the companies. This complex solution required a preprocessor that handled the concurrency heuristics of the system and split the input data across multiple state machines.
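The post doesn't show the second-iteration preprocessor, so the following is only a simplified sketch of its core idea. Given the 40-iteration limit of a Map state, it works out how many state machine executions are needed to reach a target concurrency, then splits the company IDs across them. `plan_executions`, `target_concurrency`, and the round-robin split are all assumptions. The real preprocessor also had to cope with API limits, which this sketch omits.

```python
import math
from typing import List


def plan_executions(
    company_ids: List[str],
    target_concurrency: int,
    per_map_concurrency: int = 40,
) -> List[List[str]]:
    """Split company IDs into one input batch per state machine execution (hypothetical)."""
    if target_concurrency <= 0 or per_map_concurrency <= 0:
        raise ValueError("concurrency values must be positive")
    if not company_ids:
        return []
    # Each execution's Map state works on at most `per_map_concurrency`
    # items at once, so reaching the target needs this many executions.
    executions = math.ceil(target_concurrency / per_map_concurrency)
    # Never launch more executions than there are companies.
    executions = min(executions, len(company_ids))
    # Round-robin assignment keeps batch sizes within one item of each other.
    return [company_ids[i::executions] for i in range(executions)]
```

With a 10,000-way target, this plans 250 executions. Each one would receive its own batch as input, for example through the Step Functions `StartExecution` API.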

This second iteration was already much better than the first. It finished the execution without problems, and it could iterate over 200,000 companies in 90 minutes. However, the preprocessor was a very complex part of the system, and the high degree of parallelization pushed it against the limits of the Lambda and Step Functions APIs.

Third and final iteration

Then, during AWS re:Invent 2022, AWS announced a distributed map for Step Functions. This new type of Map state lets you build Step Functions workflows that coordinate large-scale parallel workloads. With this feature, you can easily iterate over tens of millions of objects stored in Amazon Simple Storage Service (Amazon S3), and the distributed map can launch up to 10,000 parallel sub-workflows to process the data.
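In Amazon States Language, you declare a distributed map by setting the Map state's `ProcessorConfig` mode to `DISTRIBUTED`. The following sketch builds such a definition as a Python dictionary under several assumptions: the input file is a CSV with a header row, the execution input is an EventBridge "Object Created" event, and the Lambda, S3, and SNS resource names are hypothetical. Writing the map results to S3 with `ResultWriter` and then sending a completion message to SNS is one possible design, not necessarily the one CyberGRX used.

```python
import json

# Hypothetical resource names: replace with your own.
SCORE_FUNCTION_ARN = "arn:aws:lambda:us-east-1:123456789012:function:score-company"
RESULTS_BUCKET = "example-model-results"
RESULTS_TOPIC_ARN = "arn:aws:sns:us-east-1:123456789012:model-run-finished"


def build_definition(max_concurrency: int = 10000) -> dict:
    """Return an Amazon States Language definition that uses a distributed map."""
    return {
        "Comment": "Score every company listed in an uploaded CSV file (sketch)",
        "StartAt": "ScoreCompanies",
        "States": {
            "ScoreCompanies": {
                "Type": "Map",
                # Read items directly from the uploaded object. These paths assume
                # the execution input is an EventBridge "Object Created" event.
                "ItemReader": {
                    "Resource": "arn:aws:states:::s3:getObject",
                    "ReaderConfig": {"InputType": "CSV", "CSVHeaderLocation": "FIRST_ROW"},
                    "Parameters": {
                        "Bucket.$": "$.detail.bucket.name",
                        "Key.$": "$.detail.object.key",
                    },
                },
                # DISTRIBUTED mode runs each item as its own child workflow execution.
                "ItemProcessor": {
                    "ProcessorConfig": {"Mode": "DISTRIBUTED", "ExecutionType": "EXPRESS"},
                    "StartAt": "ScoreCompany",
                    "States": {
                        "ScoreCompany": {
                            "Type": "Task",
                            "Resource": "arn:aws:states:::lambda:invoke",
                            # Each CSV row arrives as a JSON object keyed by the header.
                            "Parameters": {"FunctionName": SCORE_FUNCTION_ARN, "Payload.$": "$"},
                            "End": True,
                        }
                    },
                },
                # Upper bound on child workflows running at the same time.
                "MaxConcurrency": max_concurrency,
                # Write child results to S3 instead of returning them inline.
                "ResultWriter": {
                    "Resource": "arn:aws:states:::s3:putObject",
                    "Parameters": {"Bucket": RESULTS_BUCKET, "Prefix": "model-runs"},
                },
                # Keep the original event in the state output so later steps can read it.
                "ResultPath": "$.mapRun",
                "Next": "NotifyFinished",
            },
            "NotifyFinished": {
                "Type": "Task",
                "Resource": "arn:aws:states:::sns:publish",
                "Parameters": {
                    "TopicArn": RESULTS_TOPIC_ARN,
                    "Message.$": "States.Format('Model run finished for {}', $.detail.object.key)",
                },
                "End": True,
            },
        },
    }


if __name__ == "__main__":
    print(json.dumps(build_definition(), indent=2))
```

You could paste the printed JSON into the Step Functions console or pass it as the `definition` argument of the `CreateStateMachine` API. Express child executions must finish within five minutes, so switch `ExecutionType` to `STANDARD` if a single company can take longer than that.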

When Charles read in the News Blog post about the 10,000 parallel workflow executions, he immediately thought about trying this new state. Within a couple of weeks, he had built the new iteration of the workflow.

Because the distributed map state split the input across processors and managed the concurrency of the individual executions, Charles was able to drop the complex preprocessor code.

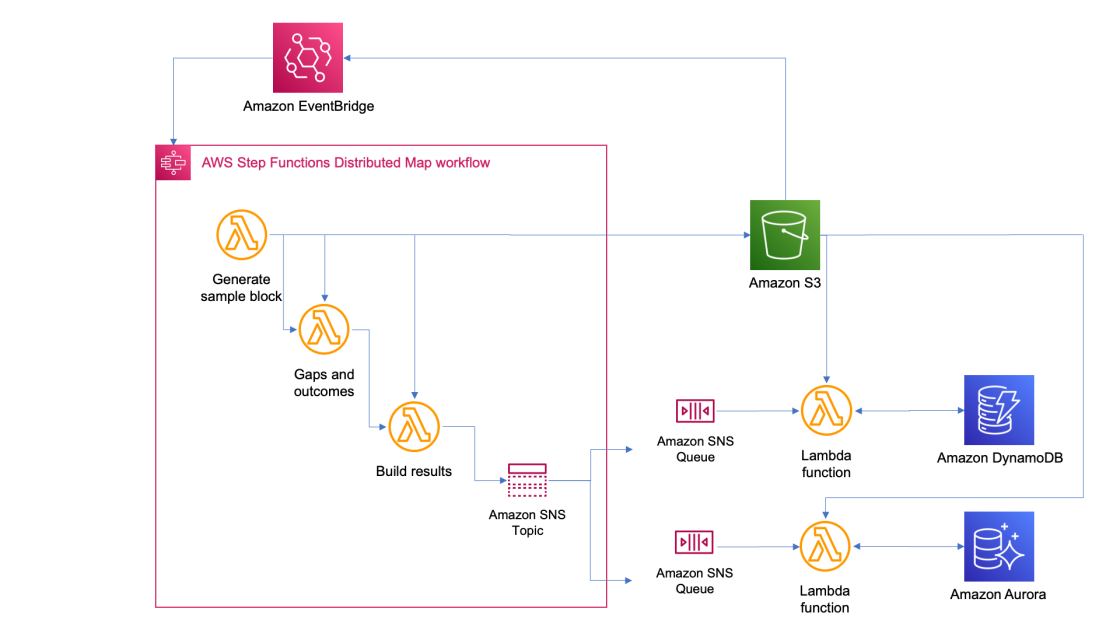

The new process is the simplest it has ever been. Now, whenever they want to run the job, they just upload a file with the input data to Amazon S3. The upload triggers an Amazon EventBridge rule that targets the state machine with the distributed map. The state machine then runs with that file as its input and publishes the results to an Amazon Simple Notification Service (Amazon SNS) topic.
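Wiring an S3 upload to a state machine takes three API calls:

1. Enable EventBridge notifications on the bucket.
2. Create a rule that matches "Object Created" events for that bucket.
3. Add the state machine as the rule's target.

The following sketch assumes boto3 clients are passed in. The bucket, rule, and ARN values are hypothetical, and the IAM role must allow EventBridge to call `states:StartExecution`. By default, the rule passes the whole S3 event to the state machine as execution input.

```python
import json
from typing import Any


def wire_upload_trigger(
    s3_client: Any,
    events_client: Any,
    bucket: str,
    rule_name: str,
    state_machine_arn: str,
    role_arn: str,
) -> dict:
    """Start the state machine whenever an object is created in `bucket` (sketch)."""
    # S3 sends events to EventBridge only after this is enabled on the bucket.
    # Caution: this call replaces the bucket's entire notification configuration.
    s3_client.put_bucket_notification_configuration(
        Bucket=bucket,
        NotificationConfiguration={"EventBridgeConfiguration": {}},
    )
    # Match only "Object Created" events from this bucket.
    pattern = {
        "source": ["aws.s3"],
        "detail-type": ["Object Created"],
        "detail": {"bucket": {"name": [bucket]}},
    }
    events_client.put_rule(Name=rule_name, EventPattern=json.dumps(pattern), State="ENABLED")
    # The state machine is the rule's target; role_arn lets EventBridge start it.
    events_client.put_targets(
        Rule=rule_name,
        Targets=[{"Id": "model-run", "Arn": state_machine_arn, "RoleArn": role_arn}],
    )
    return pattern
```

In practice you would call it with `boto3.client("s3")` and `boto3.client("events")`. Infrastructure-as-code tools can declare the same rule and target instead.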

What was the impact?

A few weeks after completing the third iteration, they had to run the job on all 227,000 companies in their platform. When the job finished, Charles's team was blown away: the whole process took only 56 minutes. They estimated that during those 56 minutes, the job ran more than 57 billion calculations.
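To compare the iterations directly, the following sketch computes rough throughput from the figures quoted in this post. Each run covered a different number of companies (150,000, 200,000, and 227,000), and the 8-day figure is approximate. Companies per minute is therefore a fairer comparison than raw duration.

```python
# Figures taken from this post: (companies processed, run time in minutes).
RUNS = {
    "Iteration 1: Argo Workflows": (150_000, 8 * 24 * 60),  # about 8 days
    "Iteration 2: Map state + preprocessor": (200_000, 90),
    "Iteration 3: Distributed map": (227_000, 56),
}


def companies_per_minute(companies: int, minutes: float) -> float:
    """Average throughput of one run."""
    return companies / minutes


if __name__ == "__main__":
    for name, (companies, minutes) in RUNS.items():
        print(f"{name}: {companies_per_minute(companies, minutes):,.0f} companies/min")
    # 57 billion calculations in 56 minutes, expressed per second.
    print(f"Iteration 3: {57e9 / (56 * 60):,.0f} calculations/s")
```

The results are roughly 13, 2,200, and 4,050 companies per minute, respectively. That is more than a 300x throughput gain from the first iteration to the third, with about 17 million calculations per second during the final run.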

The following image shows an Amazon CloudWatch graph of the concurrent executions for one Lambda function while the workflow was running. Almost 10,000 functions were running in parallel during this time.
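The concurrency shown in a graph like this comes from the Lambda `ConcurrentExecutions` metric in CloudWatch. The following sketch assumes a boto3 CloudWatch client and a hypothetical function name, and returns the peak per-minute value for one function over a time window. Per-function values are reported for functions with reserved concurrency, as in the setup described here.

```python
from datetime import datetime
from typing import Any


def peak_concurrency(cloudwatch_client: Any, function_name: str, start: datetime, end: datetime) -> float:
    """Return the highest per-minute ConcurrentExecutions value for one function."""
    response = cloudwatch_client.get_metric_statistics(
        Namespace="AWS/Lambda",
        MetricName="ConcurrentExecutions",
        Dimensions=[{"Name": "FunctionName", "Value": function_name}],
        StartTime=start,
        EndTime=end,
        Period=60,  # one datapoint per minute
        Statistics=["Maximum"],
    )
    # Datapoints come back unordered, so take the max explicitly; 0.0 if none.
    datapoints = response.get("Datapoints", [])
    return max((point["Maximum"] for point in datapoints), default=0.0)
```

Calling it with `boto3.client("cloudwatch")` and the job's start and end times would return a single number comparable to the peak in the graph.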

Simplifying and shortening the job opens up many possibilities for CyberGRX and its data science team. The benefits started right away. One of the data scientists wanted to run the job to test some improvements they had made to the model, and they were able to run it on their own without needing an engineer's help.

And because the predictive model itself is one of CyberGRX's key offerings, the company now has a more competitive product: the predictive analysis can be refined on a daily basis.

Learn more about using AWS Step Functions.

You can also check out the Serverless Workflows Collection in Serverless Land to test and learn more about this new capability.

— Marcia
